LLMOps: Your Organization’s Accelerator to the Future

In the fast-evolving world of artificial intelligence, LLMOps (Large Language Model Operations) has emerged as a critical framework for managing and optimizing large language models (LLMs). These advanced AI systems, like OpenAI’s GPT-4 and Google’s Gemini, are transforming industries by enabling tasks such as automated content creation, decision support, and customer service.

To fully unlock the potential of these models, organizations need LLMOps to ensure seamless integration, optimize performance, and maintain reliability. As generative AI becomes a key driver of innovation, mastering LLMOps is essential for staying ahead in the competitive AI landscape.

Why LLMOps is Essential

LLMOps is a tailored discipline designed to address the specific challenges posed by large language models. Unlike traditional machine learning models, LLMs require specialized workflows due to their size, complexity, and unique operational needs.

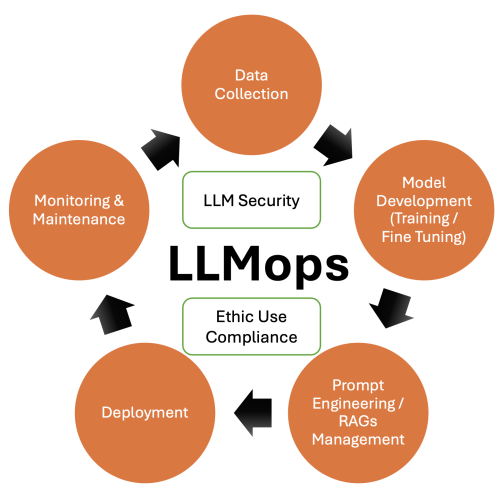

Core Responsibilities of LLMOps

Prompt Engineering and Optimization: At the heart of LLM usage lies prompt engineering—crafting, testing, and iterating on effective prompts that guide model behavior. This involves managing different prompt versions, ensuring reproducibility, and using tools like LangChain or W&B Prompts to optimize outputs.
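Prompt versioning can be pictured as an append-only registry. Each template is stored under a name and a version number, and each version gets a short content hash that can be logged with every model call so results stay reproducible. This is a minimal sketch under that assumption. The class and its methods (`PromptRegistry`, `register`, `get`, `fingerprint`) are hypothetical and do not reflect the APIs of LangChain or W&B Prompts.

```python
import hashlib
from typing import Dict, List, Optional


class PromptRegistry:
    """Hypothetical in-memory store of prompt templates with immutable, numbered versions."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}

    def register(self, name: str, template: str) -> int:
        # Append a new version; earlier versions are never overwritten.
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        return len(versions)  # versions are numbered from 1

    def get(self, name: str, version: Optional[int] = None) -> str:
        # Default to the latest version when none is pinned.
        versions = self._versions[name]
        return versions[-1] if version is None else versions[version - 1]

    def fingerprint(self, name: str, version: int) -> str:
        # Short content hash to log with each model call for reproducibility.
        return hashlib.sha256(self.get(name, version).encode("utf-8")).hexdigest()[:12]


registry = PromptRegistry()
registry.register("summarize", "Summarize the text below:\n{text}")
v2 = registry.register("summarize", "Summarize the text below in 3 bullet points:\n{text}")
prompt = registry.get("summarize", v2).format(text="LLMOps covers prompts, models, and monitoring.")
print(v2, registry.fingerprint("summarize", v2))
```

Old versions are never mutated, so any logged output can be traced back to the exact prompt text that produced it.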

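Model Fine-Tuning and Customization: Fine-tuning adapts pre-trained models like GPT-4 to domain-specific tasks by continuing training on curated data. Reinforcement learning from human feedback (RLHF) further aligns model behavior with human preferences. Retrieval-augmented generation (RAG) complements fine-tuning: it supplies relevant documents at inference time without changing the model’s weights. Together, these techniques help LLMs produce accurate, contextual, and actionable outputs.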
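Retrieval-augmented generation leaves the model untouched. At query time it retrieves relevant passages and places them in the prompt. The sketch below shows that flow in its simplest form. It assumes a tiny hypothetical knowledge base (`DOCS`) and uses bag-of-words cosine similarity as a stand-in for the learned embeddings and vector database a production system would typically use.

```python
import math
import re
from collections import Counter

DOCS = [  # hypothetical internal knowledge base
    "Refunds are processed within 5 business days of approval.",
    "Premium support is available 24/7 via chat and phone.",
    "Passwords must be rotated every 90 days for admin accounts.",
]


def vectorize(text: str) -> Counter:
    # Lowercased word counts; a stand-in for a learned embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list, k: int = 1) -> list:
    # Rank documents by similarity to the query and keep the top k.
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list) -> str:
    # Retrieved passages are injected as context; the model weights are not modified.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k=1))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("How long do refunds take?", DOCS))
```

Updating what the model "knows" here only requires editing `DOCS`, with no retraining. This is the main operational difference from fine-tuning.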

Deployment and Scalability: Deploying LLMs efficiently requires seamless integration with existing systems, whether on cloud platforms, on-premises, or at the edge. LLMOps oversees infrastructure management, ensuring scalability and cost optimization while maintaining performance.
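One common cost-optimization lever is to avoid paying twice for the same request. The sketch below assumes a hypothetical `call_model(model, prompt)` function and places a least-recently-used cache in front of it. Identical (model, prompt) pairs are served from memory, and the oldest entries are evicted once `max_entries` is exceeded.

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class ResponseCache:
    """LRU cache for identical (model, prompt) pairs; a hypothetical cost-saving layer."""

    def __init__(self, call_model: Callable[[str, str], str], max_entries: int = 1024):
        self.call_model = call_model
        self.max_entries = max_entries
        self._store = OrderedDict()
        self.hits = 0
        self.misses = 0

    def _key(self, model: str, prompt: str) -> str:
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        self.misses += 1
        response = self.call_model(model, prompt)  # the only billable call
        self._store[key] = response
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return response


calls = []


def fake_model(model: str, prompt: str) -> str:
    # Hypothetical stand-in for a paid model API.
    calls.append(prompt)
    return f"echo:{prompt}"


cache = ResponseCache(fake_model, max_entries=2)
cache.complete("model-a", "hello")
cache.complete("model-a", "hello")
print(cache.hits, cache.misses)  # 1 1
```

Caching like this only fits cases where identical prompts should get identical answers, such as deterministic settings or lookups that tolerate some staleness. For open-ended generation it may return outdated or less varied responses.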

Continuous Monitoring and Maintenance: Monitoring LLM performance is essential to prevent issues like model drift, hallucinations, or outdated knowledge. LLMOps teams implement robust observability frameworks to track metrics like accuracy, latency, and token usage, ensuring the model remains effective over time.
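Latency and token usage can be captured by wrapping whatever function calls the model. The sketch below covers only those two metrics, since accuracy needs labeled data or an evaluator. It assumes a hypothetical single-argument model function and approximates tokens by whitespace splitting, which will not match any real model's tokenizer.

```python
import math
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CallMetrics:
    latencies_ms: List[float] = field(default_factory=list)
    prompt_tokens: int = 0
    completion_tokens: int = 0

    def p95_latency_ms(self) -> float:
        # Nearest-rank 95th percentile; returns 0.0 before any calls are recorded.
        if not self.latencies_ms:
            return 0.0
        ordered = sorted(self.latencies_ms)
        rank = math.ceil(0.95 * len(ordered))
        return ordered[rank - 1]


def count_tokens(text: str) -> int:
    # Crude whitespace proxy; a real deployment would use the model's own tokenizer.
    return len(text.split())


def monitored(call_model: Callable[[str], str], metrics: CallMetrics) -> Callable[[str], str]:
    """Wrap a model-calling function so every call records latency and token counts."""
    def wrapper(prompt: str) -> str:
        start = time.perf_counter()
        response = call_model(prompt)
        metrics.latencies_ms.append((time.perf_counter() - start) * 1000)
        metrics.prompt_tokens += count_tokens(prompt)
        metrics.completion_tokens += count_tokens(response)
        return response
    return wrapper


metrics = CallMetrics()
llm = monitored(lambda p: "stub answer for " + p, metrics)  # hypothetical model stub
llm("What is LLMOps?")
print(metrics.prompt_tokens, metrics.completion_tokens)  # 3 6
```

In practice these counters would be exported to an observability backend rather than kept in memory. The wrapper pattern stays the same.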

Security and Compliance: As LLMs process sensitive information, LLMOps enforces strict security protocols, including encryption, access control, and compliance with regulations like GDPR and CCPA.
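Encryption and access control are usually enforced by the surrounding infrastructure. One complementary application-level step is to redact obvious personal data before a prompt leaves the organization's trust boundary, for example before it is sent to an external model API. This is in the spirit of GDPR's data-minimization principle. The regular expressions below are illustrative assumptions only: they will miss many formats and may over-match others, such as dates.

```python
import re

# Illustrative patterns only; production systems typically rely on dedicated PII detectors.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace matched PII with typed placeholders before the text is sent to a model."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com or +1 (555) 010-2345 about the claim."))
# Contact [EMAIL] or [PHONE] about the claim.
```

Typed placeholders such as `[EMAIL]` keep the prompt readable for the model while withholding the actual values.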

LLMOps, MLOps, and AIOps

LLMOps vs. MLOps

While MLOps encompasses the entire lifecycle of machine learning models, LLMOps specializes in the unique demands of large language models. These include high training and inference costs, dependence on third-party model APIs, and the need for text-specific evaluation of free-form outputs, such as the BLEU and ROUGE n-gram overlap metrics.
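To make the overlap idea concrete, ROUGE-1 compares the unigrams in a generated text with those in a reference. It reports precision, recall, and their harmonic mean (F1). The function below is a minimal sketch using lowercase whitespace tokenization. Reference implementations apply their own tokenization and optional stemming, so their scores can differ from this one.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap ROUGE-1 F1 with simple lowercase whitespace tokenization."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # shared unigrams, clipped to the smaller count
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


print(round(rouge1_f1("the cat sat on the mat", "the cat is on the mat"), 3))  # 0.833
```

In the example, five of the six words overlap in each direction, giving precision = recall = 5/6. Because metrics like this reward word overlap rather than meaning, they are usually paired with other evaluation methods for LLM outputs.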

LLMOps vs. AIOps

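The term AIOps most commonly refers to AI for IT operations. This means applying machine learning to IT monitoring data for tasks such as anomaly detection, event correlation, and incident response. LLMOps, by contrast, is about operating language models themselves. The two can intersect when LLMs are used inside AIOps tooling, but LLMOps zeroes in on the operational requirements of language models and their integration with generative AI workflows.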

The Strategic Role of LLMOps in Generative AI

As generative AI reshapes industries, LLMOps provides the foundation for its successful adoption. LLMs are central to GenAI solutions, but they require extensive operational support to perform reliably in production.

Driving Business Efficiency

LLMs can automate repetitive tasks, streamline decision-making, and enhance customer experiences, and LLMOps keeps those applications reliable enough to deliver these productivity gains at scale. For example, LLMs can draft documents, generate code, or handle customer queries, freeing people for higher-value work.

Future-Proofing AI Investments

LLMOps ensures that organizations remain adaptable to changing business needs and technological advances. This includes handling API updates, integrating new data sources, and retraining models as needed to keep outputs relevant and impactful.
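Handling an API or model update safely often comes down to a regression check. A fixed "golden set" of prompts is run against both the current and the candidate model, and the switch is made only if quality does not drop. The sketch below assumes hypothetical single-argument model functions and a deliberately crude pass criterion: the answer must contain an expected substring.

```python
from typing import Callable, List, Tuple

# Hypothetical golden set: prompts paired with a substring the answer must contain.
GOLDEN_SET: List[Tuple[str, str]] = [
    ("What is the capital of France?", "Paris"),
    ("What is 2 + 2?", "4"),
]


def pass_rate(call_model: Callable[[str], str], cases: List[Tuple[str, str]]) -> float:
    # Fraction of cases whose response contains the expected substring (case-insensitive).
    passed = sum(expected.lower() in call_model(prompt).lower() for prompt, expected in cases)
    return passed / len(cases)


def safe_to_upgrade(current, candidate, cases, tolerance: float = 0.0) -> bool:
    # Promote the candidate only if it does not regress beyond the allowed tolerance.
    return pass_rate(candidate, cases) >= pass_rate(current, cases) - tolerance


def old_model(prompt: str) -> str:  # hypothetical stub for the current model version
    return "Paris" if "France" in prompt else "4"


def new_model(prompt: str) -> str:  # hypothetical stub for the candidate version
    return "The capital is Paris." if "France" in prompt else "I am not sure."


print(safe_to_upgrade(old_model, new_model, GOLDEN_SET))  # False
```

Substring matching is easy to fool (for example, "4" also appears in "14"). Real golden sets typically use stricter checks or model-based grading, but the gate-before-switching structure stays the same.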

Supporting Collaboration Across Teams

LLMOps acts as a bridge between data scientists, DevOps engineers, and business stakeholders. This collaboration fosters innovation, ensures alignment with business objectives, and accelerates time-to-market for AI solutions.

Best Practices for Implementing LLMOps

To maximize the benefits of LLMOps, organizations should adopt these best practices:

Adopt Open-Source Tools: Leverage platforms like Hugging Face Transformers for model fine-tuning and LangChain for prompt management and orchestration.

Build Scalable Infrastructure: Invest in GPUs or cloud-based solutions to support the computational demands of LLMs.

Enhance Monitoring Frameworks: Use advanced observability tools to track performance metrics and detect anomalies in real-time.
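A simple way to detect anomalies in real time is a rolling z-score. Each new measurement, such as request latency, is compared with the mean and standard deviation of a recent window, and values far outside it are flagged. The class below is a minimal sketch. The window size, threshold, and warm-up length are illustrative defaults, not recommendations.

```python
import math
from collections import deque


class RollingAnomalyDetector:
    """Flags a value whose z-score against a sliding window exceeds a threshold."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 10):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.values) >= self.min_samples:  # wait for a baseline before judging
            mean = sum(self.values) / len(self.values)
            std = math.sqrt(sum((v - mean) ** 2 for v in self.values) / len(self.values))
            if std > 0 and abs(value - mean) / std > self.threshold:
                is_anomaly = True
        # Every value joins the window, so a sustained shift gradually becomes the new baseline.
        self.values.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
for latency_ms in [100, 110] * 10:  # normal traffic
    detector.observe(latency_ms)
print(detector.observe(400))  # True
```

A z-score detector assumes roughly stable, unimodal traffic. Metrics with strong daily cycles may need seasonal baselines instead.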

Ensure Ethical AI Usage: Mitigate biases in training data and establish mechanisms for gathering human feedback to improve model fairness.
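The feedback half of this practice can start as a simple log of user ratings keyed by prompt or model version. Versions that collect enough votes but a low approval rate are surfaced for human review. The sketch below assumes thumbs-up/down ratings and hypothetical thresholds. Fairness-specific analysis would additionally require breaking results down by the relevant user groups, which this sketch does not do.

```python
from collections import defaultdict


class FeedbackLog:
    """Hypothetical store of thumbs-up/down ratings keyed by prompt or model version."""

    def __init__(self) -> None:
        self._ratings = defaultdict(list)

    def record(self, version: str, helpful: bool) -> None:
        self._ratings[version].append(helpful)

    def approval_rate(self, version: str) -> float:
        ratings = self._ratings.get(version, [])
        return sum(ratings) / len(ratings) if ratings else 0.0

    def flagged(self, min_votes: int = 20, min_rate: float = 0.7) -> list:
        # Versions with enough votes but low approval become candidates for human review.
        return [v for v, r in self._ratings.items()
                if len(r) >= min_votes and sum(r) / len(r) < min_rate]


log = FeedbackLog()
for helpful in [True] * 15 + [False] * 10:
    log.record("v1", helpful)
for _ in range(20):
    log.record("v2", True)
print(log.flagged())  # ['v1']
```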

Invest in Training: Develop internal expertise in LLMOps to empower teams to manage and optimize models effectively.

So, LLMOps!

In an era defined by AI-driven innovation, LLMOps stands out as a critical discipline for managing the complexities of large language models. By bridging the gap between technical operations and business strategy, LLMOps enables organizations to unlock the full potential of generative AI.

With capabilities like fine-tuning, monitoring, and integration, LLMOps ensures that LLMs remain reliable, efficient, and impactful. As businesses increasingly rely on these models to drive growth and innovation, investing in LLMOps is no longer optional—it’s essential for staying competitive in the AI era.